Yes and No.

With a plethora of high-quality stats trackers available for Valorant, you might be wondering why I decided to build one from scratch (excluding the API, of course). Well, the answer is pretty simple.

As a data practitioner and a netizen, I understand the power of data and how easily it can be manipulated and used with malicious intent. I do not want my personal gaming activity to be visible to a big tech corporation, nor do I want my email inbox and social media feeds bombarded with gaming ads.

Most game trackers require you to install their software on your PC, and that software needs to be running while you play. This creates a sense of paranoia about whether the software is secretly sending your personal files to its servers or mining cryptocurrency in the background.

Coming from a tech background, I was quite certain that I could pull this off and that it would be a great personal project to showcase the skills I have acquired so far.

API and Tech Stack

API

Valorant does not provide direct API access to solo developers like myself, only to big tech corporations. While looking for other ways, I found an unofficial wrapper API hosted by Henrik Dev that checked all the boxes I wanted from an API. I had been exceeding the free rate limit, so I reached out to them via Discord to ask for an API key, which they readily provided.

Django

Django has been my top choice for quickly setting up a functional web app. With it, I created a web app that asks the user to log in via Google SSO and register their in-game username, which is validated against the Valorant API and only then stored in the system. Users can also view the stats of other players even if they do not wish to register their own in-game usernames.
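To illustrate the "validate first, store second" flow, here is a minimal sketch in plain Python. It is not the code from my Django app: the function name `register_username`, the `exists_in_api` callable, and the in-memory `store` are all hypothetical stand-ins for the real API lookup and database write.

```python
from typing import Callable


def register_username(
    username: str,
    exists_in_api: Callable[[str], bool],
    store: set[str],
) -> bool:
    """Save the username only if the lookup confirms it exists.

    `exists_in_api` is a hypothetical stand-in for the real API call,
    and `store` is a stand-in for the database table.
    """
    cleaned = username.strip()
    if not cleaned:
        # Reject empty input before spending an API call on it.
        return False
    if not exists_in_api(cleaned):
        # Unknown username: nothing is written to the store.
        return False
    store.add(cleaned)
    return True


# Usage with a fake lookup backed by a set of "known" usernames.
known_usernames = {"PlayerOne#1234"}
saved_usernames: set[str] = set()

accepted = register_username(
    "PlayerOne#1234", known_usernames.__contains__, saved_usernames
)
rejected = register_username(
    "Nobody#0000", known_usernames.__contains__, saved_usernames
)
```

Passing the lookup in as a callable keeps the rule itself easy to test without hitting the network or the rate limit.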

The web app is hosted on Render and is available at valorant.deepsonshrestha.com.np.

Supabase & Postgres

Supabase is an open-source alternative to Firebase, but that was not why I picked this BaaS (Backend as a Service). The main reason was that it is one of the best free Postgres database providers. Supabase provides 500 MB of space for the Postgres database, which I decided would be more than sufficient for my project. In Supabase, I created two separate databases: one for the web app and one for warehousing.

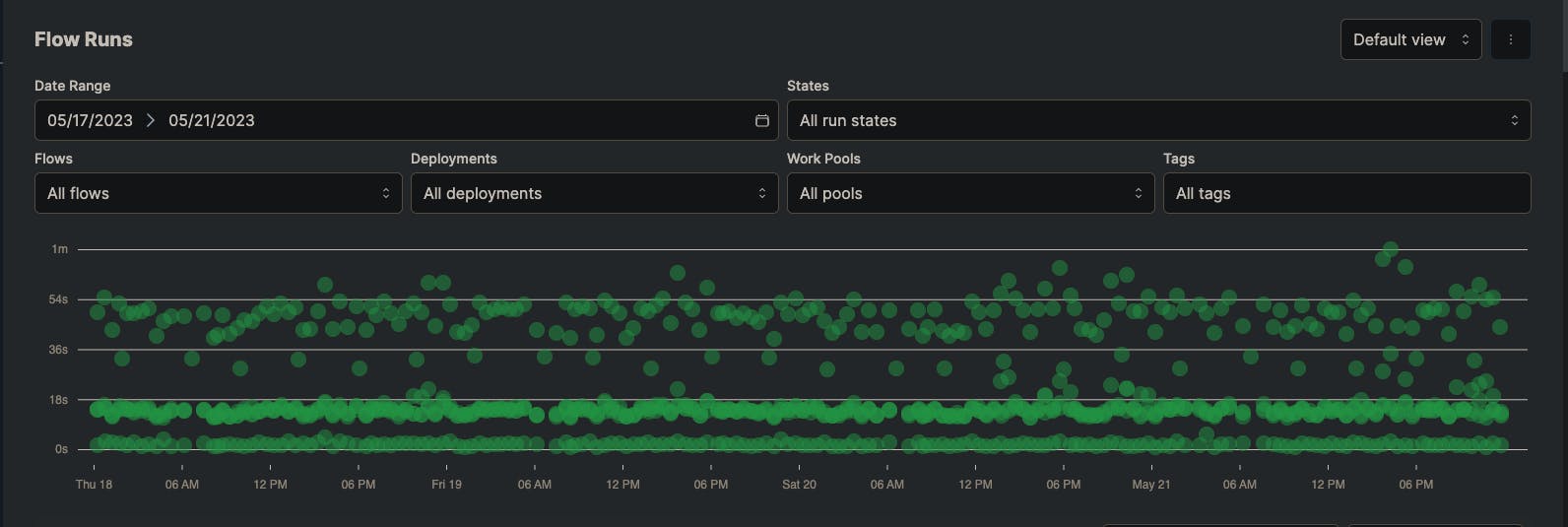

Prefect 2.0

Prefect is a data orchestration tool that lets you manage different data workflows in a centralized place. I used it to extract data from both sources (raw data from the API and user data collected by the Django web app) and load it into the warehousing Postgres database. Duplicate data is handled, so only fresh data is loaded into the warehousing database.
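The duplicate handling can be pictured roughly like this. It is a simplified sketch rather than the actual Prefect task: the helper name `select_fresh_rows` and the `match_id` key are assumptions made for illustration, and the rows are plain dictionaries instead of real API responses.

```python
from typing import Iterable


def select_fresh_rows(
    incoming: Iterable[dict],
    already_loaded_ids: Iterable[str],
    key: str = "match_id",  # hypothetical unique key for a row
) -> list[dict]:
    """Return only the rows whose key has not been loaded yet."""
    seen = set(already_loaded_ids)
    fresh = []
    for row in incoming:
        row_id = row[key]
        if row_id in seen:
            # Already in the warehouse, or repeated within this batch.
            continue
        seen.add(row_id)
        fresh.append(row)
    return fresh


# Usage: "m1" is already loaded and "m2" appears twice in the batch.
batch = [
    {"match_id": "m1", "kills": 12},
    {"match_id": "m2", "kills": 20},
    {"match_id": "m2", "kills": 20},
    {"match_id": "m3", "kills": 7},
]
fresh_rows = select_fresh_rows(batch, already_loaded_ids=["m1"])
```

Only the rows returned by the helper would then be inserted into the warehousing database.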

The API is called for every registered user every 30 minutes, and user data is loaded every 4 hours. These flows are scheduled to run via GitHub Actions and are reflected on the Prefect dashboard as well.

dbt

dbt Core is the tool I used to transform, using SQL, the raw data ingested into the warehousing database. I implemented a star schema for this project because it made the data much easier to analyze. I made two separate environments: one for production and the other for testing.
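For readers unfamiliar with star schemas, here is a toy illustration. It uses Python's built-in `sqlite3` as a stand-in for Postgres and dbt, and the table and column names (`dim_player`, `dim_map`, `fact_match_stats`, and so on) are made up for this example rather than taken from my actual models.

```python
import sqlite3

# In-memory database as a stand-in for the warehousing Postgres database.
conn = sqlite3.connect(":memory:")

# One central fact table that references small dimension tables.
conn.executescript(
    """
    CREATE TABLE dim_player (player_key INTEGER PRIMARY KEY, username TEXT);
    CREATE TABLE dim_map (map_key INTEGER PRIMARY KEY, map_name TEXT);
    CREATE TABLE fact_match_stats (
        match_id   TEXT,
        player_key INTEGER REFERENCES dim_player(player_key),
        map_key    INTEGER REFERENCES dim_map(map_key),
        kills      INTEGER,
        deaths     INTEGER
    );
    """
)

conn.executemany(
    "INSERT INTO dim_player VALUES (?, ?)",
    [(1, "player_a"), (2, "player_b")],
)
conn.executemany(
    "INSERT INTO dim_map VALUES (?, ?)",
    [(1, "map_x"), (2, "map_y")],
)
conn.executemany(
    "INSERT INTO fact_match_stats VALUES (?, ?, ?, ?, ?)",
    [("m1", 1, 1, 20, 10), ("m1", 2, 1, 15, 12), ("m2", 1, 2, 10, 15)],
)

# A typical dashboard query: join the fact table to a dimension and aggregate.
totals = conn.execute(
    """
    SELECT p.username, SUM(f.kills) AS kills, SUM(f.deaths) AS deaths
    FROM fact_match_stats AS f
    JOIN dim_player AS p ON p.player_key = f.player_key
    GROUP BY p.username
    ORDER BY p.username
    """
).fetchall()
```

The appeal of this layout is that every question about the data becomes a join from the fact table to one or two dimensions followed by an aggregation.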

The table powering the dashboard is refreshed every 4 hours via GitHub Actions.

Looker Studio

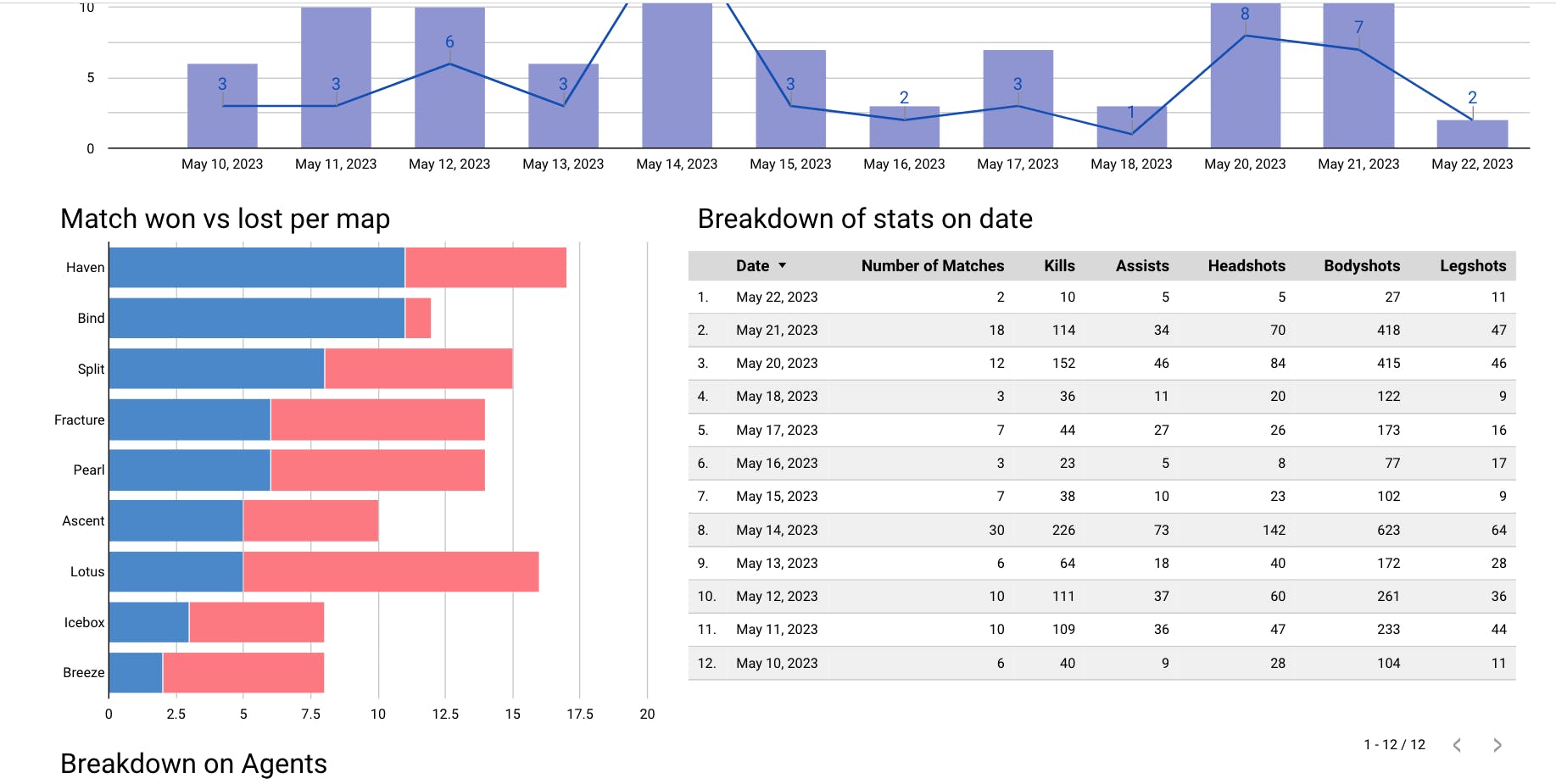

For the final piece of the puzzle, I used Looker Studio to create a functional dashboard by linking it to the table from the warehouse, and then embedded the dashboard into the Django web app itself.

Thoughts

As my friends and I play Valorant quite frequently, this project has allowed us to easily visualize our progress and compare it with each other's. The dashboard and the processes are far from perfect, and I acknowledge that many crucial insights are missing from this project. I intend to update it in the future as users provide feedback.

Thank you for reading my blog. If you have any suggestions or questions, please send them to me on LinkedIn.